Your Personal AI College Counselor: A Deep Dive into CrewAI

by Selwyn Davidraj Posted on September 21, 2025

An AI-powered research assistant that helps students discover top colleges by state and field of study, with tailored insights and details. Built with CrewAI, the project streamlines the search so that making an informed academic choice is easier.

Building a Multi-Agent College Researcher with CrewAI

I built a small but powerful multi-agent system that researches colleges, ranks them, and produces a polished report, all by composing a few specialized agents with CrewAI. This post explains what CrewAI is, how I used it, and how the pieces of the flow fit together.

Why this project? I wanted to combine LLM reasoning with real external data sources to solve a practical problem: helping students discover well-matched colleges for a chosen US state, field of study, and program level. Seeing the agents coordinate and produce a completed report was satisfying, and the project demonstrates how modular agents and tools can be stitched into a useful pipeline that solves a real-world problem.

What is CrewAI?

Before we get into the project, let's take a look at CrewAI. CrewAI is a framework for composing LLM-powered agents into coordinated workflows (crews). Each agent has a role and a goal and can optionally be given tools to perform actions (web search, API calls, etc.). Crews orchestrate agents and tasks so you can build modular multi-agent applications.

Think of a crew like a small, focused team I assemble to solve one problem. Each member of the crew — an “agent” — has a specific role and expertise (for example: researching web data, scoring candidates, or writing reports). I give each agent a clear goal and (optionally) tools they can use, such as a web-search tool or a scraper. When I run the crew, the framework orchestrates those agents and their tasks in a pipeline: agents perform their assigned work, pass results to the next step, and the crew produces the final output. This modular approach makes it easy to test, replace, or extend individual agents without rewriting the whole system.
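To make the hand-off idea concrete, here is a toy sketch in plain Python. It does not use the CrewAI API: `ToyAgent`, `run_sequentially`, the placeholder work functions, and the sample request are all made up for illustration. It only shows the shape of a sequential pipeline in which each step receives the previous step's output.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyAgent:
    """Stand-in for an agent: a role, a goal, and one unit of work."""
    role: str
    goal: str
    work: Callable[[str], str]  # takes the previous step's output, returns its own


def run_sequentially(agents: list[ToyAgent], request: str) -> str:
    """Feed each agent the previous agent's output, in order."""
    result = request
    for agent in agents:
        result = agent.work(result)
    return result


# Placeholder work functions; a real agent would call an LLM and its tools here.
toy_crew = [
    ToyAgent("Researcher", "collect candidates", lambda q: f"candidates for [{q}]"),
    ToyAgent("Ranker", "score candidates", lambda c: f"ranked {c}"),
    ToyAgent("Writer", "write the report", lambda r: f"# Report\n\n{r}"),
]

report = run_sequentially(toy_crew, "Texas, Computer Science, Bachelor's")
print(report)
```

Because each agent only sees a string in and returns a string out, any one of them can be swapped or tested in isolation, which is the property the framework relies on.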

Files to explore in this repo

- src/college_researcher/crew.py: crew, agent, and task definitions.

- src/college_researcher/config/agents.yaml: agent roles and LLM settings.

- src/college_researcher/config/tasks.yaml: task wiring and dependencies.

- src/college_researcher/main.py: runner script that kicks off the crew.

- output/report.md, output/report.html, output/candidate_colleges.json: example outputs.

How I used CrewAI in this project

Agents and tasks are configured with YAML files and exposed through a small CollegeResearchCrew class that returns configured Agent and Task objects and a Crew that runs them in sequence.

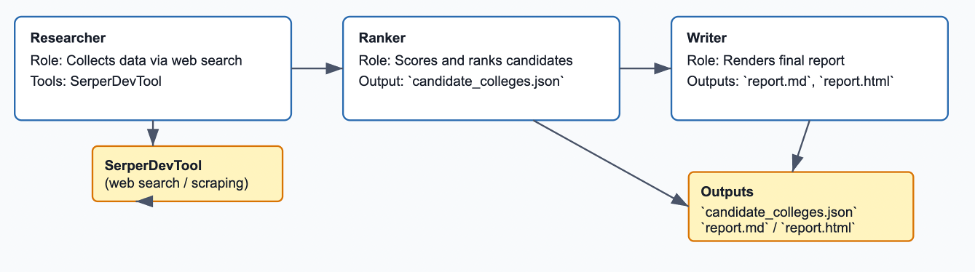

The Crew coordinates three main agents:

- Researcher — queries the web (via a search tool like Serper) to collect candidate colleges and structured data.

- Ranker — ingests the candidate list and scores colleges using affordability, outcomes, and fit heuristics.

- Writer — converts the ranked results into a readable report in Markdown/HTML.
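The three agents above could be wired together roughly as follows. This is a sketch, not the repo's code: the project keeps roles and task wiring in YAML, whereas this version inlines them for brevity. The role, goal, and backstory strings, the task descriptions, and the input keys (`state`, `field_of_study`, `program_level`) are all assumptions. It also assumes the `crewai` and `crewai_tools` packages are installed and that `SERPER_API_KEY` is set for the search tool.

```python
def build_inputs(state: str, field_of_study: str, program_level: str) -> dict:
    """Collect the three search criteria into the dict handed to kickoff()."""
    return {
        "state": state.strip(),
        "field_of_study": field_of_study.strip(),
        "program_level": program_level.strip(),
    }


def build_crew():
    """Assemble researcher -> ranker -> writer as a sequential crew."""
    # Imported here so the module still loads where crewai is not installed.
    from crewai import Agent, Crew, Process, Task
    from crewai_tools import SerperDevTool  # reads SERPER_API_KEY from the environment

    # All role/goal/backstory text below is hypothetical.
    researcher = Agent(
        role="College Researcher",
        goal="Find colleges in {state} offering {program_level} programs in {field_of_study}",
        backstory="You gather verifiable facts about colleges from the web.",
        tools=[SerperDevTool()],
    )
    ranker = Agent(
        role="College Ranker",
        goal="Score candidate colleges on affordability, outcomes, and fit",
        backstory="You compare colleges using consistent criteria.",
    )
    writer = Agent(
        role="Report Writer",
        goal="Turn the ranked list into a readable Markdown report",
        backstory="You write clear summaries for prospective students.",
    )

    research = Task(
        description="List candidate colleges in {state} for {field_of_study} ({program_level}).",
        expected_output="A list of colleges with key facts for each.",
        agent=researcher,
    )
    rank = Task(
        description="Rank the candidate colleges by affordability, outcomes, and fit.",
        expected_output="The candidates ordered best to worst, with a short rationale.",
        agent=ranker,
        context=[research],  # receives the researcher's output
    )
    write = Task(
        description="Write a report on the top-ranked colleges.",
        expected_output="A Markdown report.",
        agent=writer,
        context=[rank],  # receives the ranker's output
        output_file="output/report.md",
    )
    return Crew(
        agents=[researcher, ranker, writer],
        tasks=[research, rank, write],
        process=Process.sequential,
    )
```

With the dependencies in place, a run would look like `build_crew().kickoff(inputs=build_inputs("Texas", "Computer Science", "Bachelor's"))`; the placeholders in curly braces are filled from the inputs dict.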

Streamlit was used to build the UI

To make the project interactive, I built a simple Streamlit UI that lets users select a state, field of study, and program level, then runs the crew and displays the generated report. Streamlit handles the form inputs and output rendering, making it easy for anyone to use the tool without touching code.
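A form like the one described could be sketched as below. The option lists, widget labels, and the `run_research` callable (anything that takes the three criteria and returns the report as a Markdown string) are assumptions for illustration, not the project's actual UI code. Streamlit is imported inside the function so the module can be loaded without it.

```python
# Truncated sample lists; the real app would offer every state and level.
STATES = ["California", "New York", "Texas"]
PROGRAM_LEVELS = ["Undergraduate", "Graduate", "Doctoral"]


def render_app(run_research):
    """Draw the criteria form and show the report returned by run_research."""
    import streamlit as st  # imported lazily; requires the streamlit package

    st.title("College Researcher")

    # Widgets inside a form are only submitted together when the button is clicked.
    with st.form("criteria"):
        state = st.selectbox("US state", STATES)
        field = st.text_input("Field of study")
        level = st.selectbox("Program level", PROGRAM_LEVELS)
        submitted = st.form_submit_button("Research colleges")

    if submitted:
        with st.spinner("Running the crew..."):
            report = run_research(state, field, level)
        st.markdown(report)  # the report is expected to be Markdown text
```

To try it, call `render_app(...)` at the bottom of a script with your own function and launch the script with `streamlit run`.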

Project in action:

A simple Streamlit UI to collect a user's research criteria

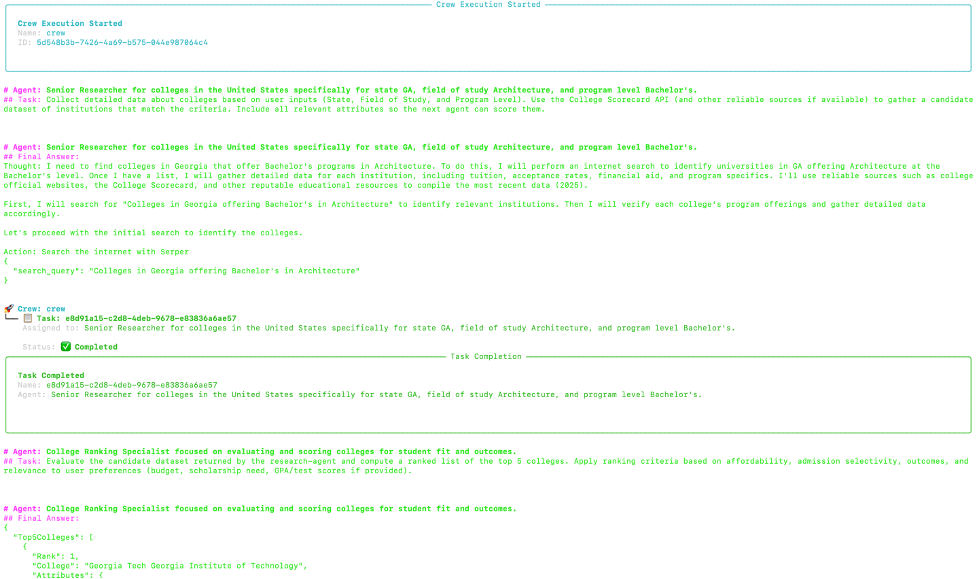

The CrewAI console showing agents performing tasks and collaborating

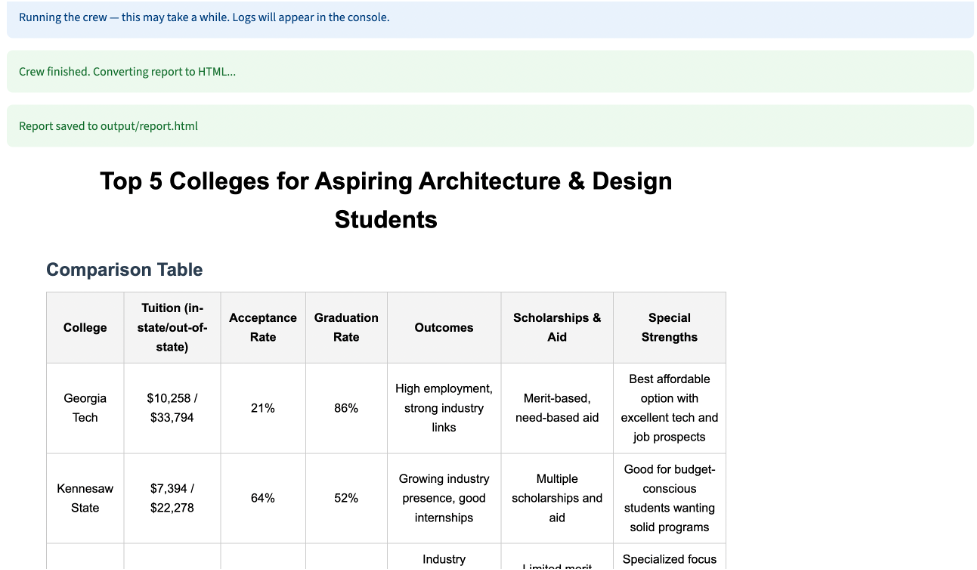

The final output—a polished, markdown-formatted report with top college recommendations

Tools and external integrations

The researcher agent uses external tools to fetch up-to-date information. In this project I used a search tool (Serper) to query the web for college details. That tool is represented as SerperDevTool in crew.py and wired into the researcher agent’s tools list. You can swap or extend tools in src/college_researcher/tools/.

If you add a custom tool, mirror the pattern in src/college_researcher/tools/custom_tool.py and ensure your environment provides any required API keys.
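One way to catch a missing key before the crew starts is a small startup check like the sketch below. The helper name `missing_keys` is made up. `SERPER_API_KEY` is the variable the Serper tool reads, while `OPENAI_API_KEY` is only an example; the LLM key you need depends on the provider you configure.

```python
import os


def missing_keys(required, env=None):
    """Return the names in `required` that are unset or empty in `env`."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


# Example key names; adjust the list to the tools and LLM provider you use.
REQUIRED_KEYS = ["SERPER_API_KEY", "OPENAI_API_KEY"]

absent = missing_keys(REQUIRED_KEYS)
if absent:
    print("Missing environment variables:", ", ".join(absent))
```

Failing early with a clear message is usually easier to debug than an authentication error raised in the middle of an agent run.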

My experience

Building this felt like assembling a small team: the researcher scouts, the ranker analyzes, and the writer crafts the final message. Each agent focuses on a single responsibility, which makes debugging and extension simple. Integrating an external search API made the results practical and grounded. The output could be improved further by adding more tools (for example, an API that pulls college data from a centralized database) and by giving the LLM behind each agent more context.

I hope this post has sparked some ideas on how you can use CrewAI to build your own multi-agent systems. Feel free to explore the full code in the repo and try it out for yourself!
