This blog explores how to analyze text data using Python - from understanding structured vs. unstructured data to transforming raw text into meaningful vector representations. Learn practical text cleaning techniques and the fundamentals of sentiment analysis, both conceptual and lexicon-based. A perfect guide for beginners to bridge language and data through Python’s NLP capabilities.

Table of contents

- Structured and Unstructured Data

- Representing Text as Vectors

- Text Preprocessing

- Sentiment Analysis

- Lexicon-Based Approach to Sentiment Analysis

Structured and Unstructured Data

Unstructured data is everywhere — emails, social media posts, documents, and images. But why does it matter in data science, and how do we make sense of it?

What is Unstructured Data?

Unstructured data refers to information that lacks a predefined format or model. Unlike data neatly organized in tables (like spreadsheets), unstructured data appears as free-form text, images, or other formats where extracting specific features isn’t straightforward.

Examples include:

- The entire text of emails in an inbox

- Satellite images

- Transcripts of speeches from the British Parliament after 1803

Structured Data vs. Unstructured Data

Structured Data:

- Organized in rows and columns (tabular format)

- Each row = an observation (e.g., a customer or an order)

- Each column = a feature or property

Unstructured Data:

- No set organization or homogeneity

- Not immediately compatible with traditional data analysis or machine learning

Why Does Structure Matter in Data Science and Machine Learning?

Structured data meshes well with machine learning algorithms. It’s fast for computers to scan for patterns and make predictions when everything is organized.

Unstructured data, by contrast, requires cleaning and transformation before any meaningful analysis can take place.

Challenges with Unstructured Data

- Difficult to Analyze Directly:

- Lacks uniformity and predefined structure

- Requires Preprocessing:

- Must convert it into structured forms for analysis

- Needs Content Understanding:

- Extracting features or relevant patterns often involves techniques from text analysis or image processing

How Do We Structure Unstructured Data?

The process typically involves two main steps:

Convert Unstructured Data to a Structured Format

Examples:

- Extract sender, recipient, and time from email text

- Identify objects and locations from satellite imagery

Apply Machine Learning or Analytical Approaches

Example Table after Structuring Email Data:

| Sender | Recipient | Size | Attachments |

|---|---|---|---|

| alice@email.com | bob@email.com | 2 KB | file.docx |

| sue@email.com | jake@email.com | 5 KB | image.png |
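
As a rough illustration of the first step, the sketch below turns one raw email into a single table row using Python's standard `email` module. The message text, the `email_to_row` helper, and the chosen columns are assumptions made for this example; real inboxes need extra handling for multipart messages and attachments.

```python
from email import message_from_string

# Hypothetical raw message; the addresses mirror the example table above.
raw_email = """\
From: alice@email.com
To: bob@email.com
Date: Mon, 06 Jan 2025 09:30:00 +0000
Subject: Quarterly report

Hi Bob, the report is attached.
"""

def email_to_row(raw):
    """Turn one raw email into a flat dict (one row of a table)."""
    msg = message_from_string(raw)
    body = msg.get_payload()  # plain string for a non-multipart message
    return {
        "sender": msg["From"],
        "recipient": msg["To"],
        "date": msg["Date"],
        "size_bytes": len(raw.encode("utf-8")),
        "body_words": len(body.split()),
    }

row = email_to_row(raw_email)
print(row)
```

A list of such dictionaries can be passed straight to `pd.DataFrame(...)` to get a table like the one above.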

Key Takeaways

- Unstructured data is abundant and comes in many forms like email text, images, or documents.

- Most machine learning algorithms expect structured, tabular input.

- Converting unstructured data into a structured form is a critical preprocessing step.

- The conversion approach depends on the nature of the data and the analysis goal.

Representing Text as Vectors

This section covers approaches to analyzing and structuring text data for machine learning, including the Bag of Words model, semantic methods, Bayesian spam filters, named entity recognition, and n-gram analysis.

Converting Unstructured to Structured Data

Raw text (unstructured) must be transformed into structured representations so algorithms can process it.

Example:

“The government announced a new energy policy.”

From the words present, you can infer the topic.

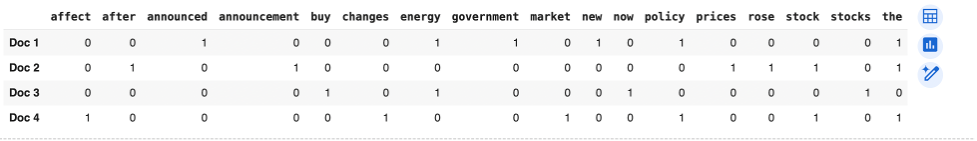

Bag of Words Model

The Bag of Words (BoW) model represents each document by the frequency of each word, regardless of order.

Key Points

- Simplifies text to numeric vectors

- Disregards grammar and word order

💻 Example Python Code

import pandas as pd

from sklearn.feature_extraction.text import CountVectorizer

# Sample corpus

documents = [

"The government announced a new energy policy.",

"Stock prices rose after the announcement.",

"Buy energy stocks now!",

"Policy changes affect the stock market."

]

vectorizer = CountVectorizer()

X = vectorizer.fit_transform(documents)

df = pd.DataFrame(X.toarray(), columns=vectorizer.get_feature_names_out())

df.index = [f'Doc {i+1}' for i in range(len(documents))]

df

Semantic Methods

Semantic methods go beyond mere word counts. They analyze:

- Grammar rules (sentence structure)

- Relationships between words (context and meaning)

While more complex and language-specific, semantic methods enable deeper understanding.

Bayesian Spam Filter

A Bayesian spam filter applies Bayes' theorem to word frequencies to estimate how likely an email is to be spam, then classifies it as spam or not spam (often called “ham”).

Key Ideas:

- Spam emails often repeat certain words (e.g., “buy”, “stock”, “free”)

- Calculate probabilities based on word presence
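
To make the probability calculation concrete, here is a minimal hand-rolled sketch of a naive Bayes score with add-one (Laplace) smoothing. The four training messages and the helper names `class_score` and `spam_probability` are assumptions for illustration; the scikit-learn code later in this section does the same job with less effort.

```python
from collections import Counter

# Tiny hypothetical training set: (text, label)
training = [
    ("buy stocks now", "spam"),
    ("limited time offer", "spam"),
    ("stock prices rise", "ham"),
    ("energy stocks climbing", "ham"),
]

# Count words per class and documents per class
word_counts = {"spam": Counter(), "ham": Counter()}
doc_counts = Counter()
for text, label in training:
    doc_counts[label] += 1
    word_counts[label].update(text.split())

vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])

def class_score(text, label):
    """Unnormalized P(label) * product of P(word | label), add-one smoothed."""
    score = doc_counts[label] / sum(doc_counts.values())
    total_words = sum(word_counts[label].values())
    for word in text.split():
        score *= (word_counts[label][word] + 1) / (total_words + len(vocabulary))
    return score

def spam_probability(text):
    """Normalize the two class scores into a probability of spam."""
    spam = class_score(text, "spam")
    ham = class_score(text, "ham")
    return spam / (spam + ham)

print(round(spam_probability("buy now"), 3))      # 0.8
print(round(spam_probability("prices rise"), 3))  # 0.2
```

Smoothing keeps a word that never appeared in one class from forcing that class's score to zero.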

Sample Data

emails = [

("Buy stocks now", "spam"),

("Stock prices rise", "ham"),

("Limited time offer", "spam"),

("Energy stocks climbing", "ham"),

("Buy now, special offer!", "spam")

]

df_emails = pd.DataFrame(emails, columns=["text", "label"])

df_emails

Simple Spam Filter Code

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.naive_bayes import MultinomialNB

from sklearn.model_selection import train_test_split

# Prepare data

vectorizer = CountVectorizer()

X = vectorizer.fit_transform(df_emails['text'])

y = df_emails['label'].map({'ham': 0, 'spam': 1})

# Train/test split (with only five emails this is purely illustrative)

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

# Train model

model = MultinomialNB()

model.fit(X_train, y_train)

# Predict on a new message

test_msg = ["Buy stocks now to get profit"]

X_new = vectorizer.transform(test_msg)

prediction = model.predict(X_new)

print("Prediction:", "Spam" if prediction[0] == 1 else "Ham")

Named Entity Recognition (NER)

Named Entity Recognition (NER) spots names of people, places, or organizations in text.

It helps understand the who, what, and where of documents.

💻 Example with spaCy

import spacy

# Download the model if missing (uncomment when running in a notebook for the first time)

# import sys

# !{sys.executable} -m spacy download en_core_web_sm

nlp = spacy.load("en_core_web_sm")

doc = nlp("Barack Obama visited Berlin in July.")

for ent in doc.ents:

    print(ent.text, ent.label_)

Output:

Barack Obama PERSON

Berlin GPE

July DATE

Corpus Representation

A corpus is a collection of documents. Structuring a corpus involves creating a matrix of words vs. documents, enabling machine analysis.

# DataFrame 'df' from Bag of Words section already serves as corpus representation

df

N-grams

N-grams consider sequences of words (not just single words):

- Bigram: 2-word combinations

- Trigram: 3-word combinations

This helps capture word order and context.
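
A small plain-Python sketch can show what the sequences look like before any counting happens. The `ngrams` helper and its whitespace tokenization are simplifying assumptions for this example.

```python
def ngrams(text, n):
    """Return all n-word sequences in order of appearance."""
    tokens = text.lower().split()
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

sentence = "Policy changes affect the stock market"
bigrams = ngrams(sentence, 2)
trigrams = ngrams(sentence, 3)

print(bigrams)
# ['policy changes', 'changes affect', 'affect the', 'the stock', 'stock market']
print(trigrams)
# ['policy changes affect', 'changes affect the', 'affect the stock', 'the stock market']
```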

💻 N-grams Example

vectorizer = CountVectorizer(ngram_range=(2,2))

X_ngrams = vectorizer.fit_transform(documents)

df_ngrams = pd.DataFrame(X_ngrams.toarray(), columns=vectorizer.get_feature_names_out())

df_ngrams

Visualizing Word Frequencies

import matplotlib.pyplot as plt

word_counts = df.sum().sort_values(ascending=False)

word_counts.plot(kind='bar', figsize=(10,4), title='Word Frequencies in Sample Corpus')

plt.xlabel('Word')

plt.ylabel('Frequency')

plt.show()

Key Takeaways

- Unstructured text needs to be structured for analysis.

- Bag of Words and n-grams provide simple numeric representations.

- Semantic methods capture deeper meaning, while Bayesian methods support real-world tasks like spam detection.

- Named Entity Recognition (NER) adds context about what’s mentioned in text.

Text Preprocessing

Text preprocessing is the process of cleaning and transforming raw text into an analyzable format for natural language processing (NLP) and machine learning tasks. Good preprocessing simplifies data, removes noise, and improves the performance of downstream models like classifiers or clusterers.

Core Text Preprocessing Techniques

Stop-word Removal

What are stop words?

Common words such as “the”, “and”, “in”, etc., that appear frequently in text but add little meaningful information for analysis.

Benefit:

Removing these words helps algorithms focus on the content-rich portions of text, improving understanding and results.

Example:

- Original: The quick brown fox jumps over the lazy dog.

- After stop-word removal: quick brown fox jumps lazy dog
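
The example above can be reproduced with a few lines of plain Python. The stop-word set here is a short illustrative list chosen for this sketch (an assumption); libraries such as NLTK and spaCy ship much longer ones.

```python
import string

# Small illustrative stop-word list (an assumption, not a standard list)
STOP_WORDS = {"the", "a", "an", "and", "in", "of", "over", "is", "to"}

def remove_stop_words(text):
    """Lowercase, strip punctuation from token edges, drop stop words."""
    tokens = (tok.strip(string.punctuation) for tok in text.lower().split())
    return " ".join(tok for tok in tokens if tok and tok not in STOP_WORDS)

cleaned = remove_stop_words("The quick brown fox jumps over the lazy dog.")
print(cleaned)  # quick brown fox jumps lazy dog
```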

Stemming

Definition:

Stemming reduces words to their base or root form.

Example: “playing”, “played”, and “plays” → “play”.

Why stem?

Treats different word forms as the same feature, which is helpful for text classification or clustering.

Popular Python Libraries:

- NLTK’s PorterStemmer

- Snowball Stemmer

Example:

“Chopping”, “chopped”, “chops” → “chop”
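
To show the idea behind stemming without any dependencies, here is a deliberately naive suffix stripper. It is a toy written for this example, not the Porter or Snowball algorithm, and it will mis-stem many words; for real projects a library stemmer is the safer choice.

```python
def naive_stem(word):
    """Strip a few common English suffixes (toy example, not Porter)."""
    word = word.lower()
    if word.endswith("ss"):  # leave words like "glass" alone
        return word
    for suffix in ("ing", "ed", "s"):
        stem = word[: -len(suffix)]
        if word.endswith(suffix) and len(stem) >= 3:
            # Undo consonant doubling, e.g. "chopp" -> "chop"
            if suffix != "s" and stem[-1] == stem[-2] and stem[-1] not in "aeiouls":
                stem = stem[:-1]
            return stem
    return word

words = ["playing", "played", "plays", "chopping", "chopped"]
stems = [naive_stem(w) for w in words]
print(stems)  # ['play', 'play', 'play', 'chop', 'chop']
```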

Case Conversion

What is it?

Changing all characters (usually to lowercase) to ensure consistent comparison.

Why?

Prevents “Play” and “play” from being treated as distinct words.

Implementation Tip:

text = text.lower()

Punctuation and White Space Removal

Purpose:

Removes punctuation (“!”, “.”, “,”) and extra spaces, which typically do not add value for models like bag-of-words.

Benefit:

Ensures that tokenization (splitting text into words) is clean and consistent.

Example:

"Hello, world!" → "Hello world"

Number Removal

When to use:

In many texts, standalone numbers don’t provide useful semantic context (unless doing numerical analysis, e.g., in financial documents).

Example:

"There are 10 apples and 20 oranges." → "There are apples and oranges."

The Bag of Words Model

Once text is preprocessed, it’s commonly represented using the Bag of Words (BoW) model.

Representation:

Each document is converted into a vector containing word frequencies (counts) for every word in the vocabulary.

Why useful?

Transforms variable-length text into fixed-length, comparable vectors.

Enables algorithms to process and analyze text for similarities, classification, and clustering.

Example:

Given two documents:

- Doc 1: “Harry likes magic.”

- Doc 2: “Magic fascinates Harry.”

BoW representation ignores grammar and order, focusing only on word occurrence counts.
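
For these two documents the vectors can be built by hand with `collections.Counter`. This is a minimal sketch; the regex tokenizer and the alphabetical vocabulary order are choices made for the example.

```python
import re
from collections import Counter

docs = ["Harry likes magic.", "Magic fascinates Harry."]

def tokenize(text):
    """Lowercase the text and keep only alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())

counts = [Counter(tokenize(d)) for d in docs]
vocabulary = sorted(set().union(*counts))
vectors = [[c[word] for word in vocabulary] for c in counts]

print(vocabulary)  # ['fascinates', 'harry', 'likes', 'magic']
print(vectors[0])  # [0, 1, 1, 1]
print(vectors[1])  # [1, 1, 0, 1]
```

Both vectors have the same length because they share one vocabulary, which is what makes the documents directly comparable.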

Why is Text Preprocessing So Important?

Text preprocessing unlocks the power of text data by:

- Improving Model Accuracy: Cleaned text yields better, more meaningful features, leading to more accurate predictions.

- Reducing Noise: Focuses on the most important, discriminative characteristics in your data.

- Standardizing Data: Ensures consistent representation for models — from simple BoW to advanced NLP workflows.

📊 Summary Table

| Technique | Description | Example |

|---|---|---|

| Stop-word Removal | Remove common words | “the”, “and”, “is” |

| Stemming | Reduce to base form | “playing” → “play” |

| Case Conversion | Lowercase/uppercase | “Play” = “play” |

| Punctuation Removal | Remove symbols | “data, science!” → “data science” |

| Number Removal | Remove numerals | “50 apples” → “apples” |
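
Several of the techniques in the table can be chained into one small cleaning function. The sketch below uses only the standard library; the function name `clean_text` and the order of the steps are assumptions for illustration. Note that `string.punctuation` covers ASCII punctuation only, so curly quotes would need separate handling.

```python
import re
import string

def clean_text(text):
    """Lowercase, then drop digits, ASCII punctuation, and extra whitespace."""
    text = text.lower()
    text = re.sub(r"\d+", " ", text)
    text = text.translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())

print(clean_text("Hello, world!"))                        # hello world
print(clean_text("There are 10 apples and 20 oranges."))  # there are apples and oranges
```

Whether to remove numbers at all depends on the task, so in practice that step is often made optional.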

Key Takeaways

Every NLP project benefits from thoughtful text preprocessing. By applying techniques like stop-word removal, stemming, case and punctuation normalization, and number filtering, you lay a strong foundation for insightful, accurate text analysis.

💡 Ready to experiment?

Try implementing these steps using Python libraries like NLTK, spaCy, or scikit-learn, which we will cover in detail later.

Sentiment Analysis

Sentiment analysis — also known as opinion mining — is a powerful technique in data science and natural language processing (NLP) that helps organizations, researchers, and developers understand people’s attitudes, emotions, and intentions from text data.

What is Sentiment Analysis?

Sentiment analysis involves examining textual data to determine the sentiment, emotion, or intent expressed by the author.

It is used to assess how people feel (e.g., positive, negative, neutral) about a topic or entity in reviews, social media posts, emails, and more.

Types of Insights from Sentiment Analysis

- Polarity Detection: Determines if the sentiment is positive, negative, or neutral.

- Emotion Detection: Identifies emotions such as happiness, anger, sadness, or surprise.

- Intent Detection: Reveals the purpose of a message (e.g., query, suggestion, complaint).

- Aspect Detection: Pinpoints sentiment towards specific product features or aspects.

Key Applications of Sentiment Analysis

Sentiment analysis has widespread real-world utility across industries:

- Product and Service Reviews: Analyze customer feedback on Amazon, Yelp, or app stores to understand satisfaction and pain points.

- Media and Book Reviews: Gauge public opinion on movies, books, or other content.

- Early Warning for Businesses: Track shifting customer sentiment as an alert system for issues with products or services.

- Market Research: Monitor competitors and market trends by analyzing public sentiment on brands or products.

- Social Media Monitoring: Detect brand reputation and emerging crises from platforms like Twitter and Facebook.

💡 For businesses, knowing how customers feel is sometimes more valuable than knowing exactly what they say.

Approaches to Sentiment Analysis

There are two primary strategies for implementing sentiment analysis:

1️⃣ Lexicon-Based Approach

How it Works:

Utilizes a predefined dictionary (lexicon) of words, each labeled with a sentiment score (positive, negative, neutral).

Features:

- Language-specific — each language requires its own tailored lexicon.

- Easy to interpret and doesn’t require training data.

Pros:

✅ Simple, interpretable, and fast.

Cons:

❌ Limited adaptability to slang, sarcasm, or new words.

Example:

If the lexicon contains “great” (+1) and “bad” (–1), then the sentence —

“This product is great” — would be classified as positive.
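
The example above can be sketched in a few lines. The two-word lexicon comes from the example; the `polarity` helper and its sum-of-scores rule are assumptions, one simple way among several to aggregate word scores.

```python
import re

# Toy lexicon from the example above; real lexicons hold thousands of entries.
LEXICON = {"great": 1, "bad": -1}

def polarity(text):
    """Sum the lexicon scores of all words and map the total to a label."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = sum(LEXICON.get(tok, 0) for tok in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(polarity("This product is great"))  # positive
print(polarity("This product is bad"))    # negative
print(polarity("This product arrived"))   # neutral
```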

2️⃣ Machine Learning-Based Approach

How it Works:

Trains a machine learning model (like logistic regression, SVM, or neural networks) on labeled datasets containing text and sentiment labels.

Pros:

✅ Adapts well to varied vocabularies and captures subtle context.

Cons:

❌ Requires labeled data and more computational resources.

Advanced Techniques:

- Deep learning (using LSTM or transformer models like BERT)

- Transfer learning with pre-trained language models
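
As a minimal sketch of the machine learning route, the snippet below trains a logistic regression classifier on TF-IDF features with scikit-learn. The six labeled reviews are invented for illustration, and a dataset this small says nothing about real-world accuracy; the point is only the shape of the workflow.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Hypothetical labeled reviews; a real project needs far more data.
texts = [
    "I love this phone, the battery is great",
    "Excellent camera and great screen",
    "Really happy with this purchase",
    "Terrible battery, very disappointed",
    "The screen is bad and the camera is poor",
    "Awful product, I want a refund",
]
labels = ["positive", "positive", "positive", "negative", "negative", "negative"]

# Vectorize the text and fit the classifier in one pipeline
model = make_pipeline(TfidfVectorizer(), LogisticRegression())
model.fit(texts, labels)

new_reviews = ["The battery is great", "Terrible camera"]
predictions = model.predict(new_reviews)
print(list(zip(new_reviews, predictions)))
```

Swapping `LogisticRegression` for another scikit-learn classifier, such as `LinearSVC`, leaves the rest of the pipeline unchanged.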

Variant – Aspect-Based Sentiment Analysis

This focuses on extracting sentiment related to specific features — for example, identifying that:

“Battery life is great but the camera is poor.”

shows positive sentiment towards the battery but negative towards the camera.
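
A very rough rule-based sketch of this idea splits the sentence into clauses and scores each clause against a small lexicon. The aspect keywords, the lexicon, and the clause-splitting rule are all assumptions chosen to fit this one sentence; production systems typically rely on parsing or trained models.

```python
import re

ASPECTS = {"battery", "camera"}     # hypothetical aspect keywords
LEXICON = {"great": 1, "poor": -1}  # hypothetical polarity lexicon

def label(score):
    """Map a numeric score to a polarity label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

def aspect_sentiment(text):
    """Assign each clause's polarity to the aspects mentioned in it."""
    results = {}
    # Treat text between "but", "and", or punctuation as one opinion unit
    for clause in re.split(r"\bbut\b|\band\b|[,;.]", text.lower()):
        tokens = re.findall(r"[a-z]+", clause)
        score = sum(LEXICON.get(tok, 0) for tok in tokens)
        for aspect in ASPECTS.intersection(tokens):
            results[aspect] = label(score)
    return results

result = aspect_sentiment("Battery life is great but the camera is poor.")
print(result)
```

For the sentence above this yields positive for `battery` and negative for `camera`.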

Why is Sentiment Analysis Important for Data Scientists?

- Business Impact: Enables organizations to respond proactively to customer needs.

- Research Utility: Helps social scientists gauge public mood and reactions.

- Innovation: Powers chatbots, recommendation systems, and market analysis tools.

Key Takeaways

Sentiment analysis turns the subjective world of text into actionable insights, making it invaluable across industries — from retail to politics.

Whether you’re using simple lexicons or complex machine learning models, mastering this technique equips you to extract real business value and deepen your expertise in Python-driven NLP.

Lexicon-Based Approach to Sentiment Analysis

The lexicon-based approach relies on a predefined list (or lexicon) of words, where each word is mapped to one or more sentiments — such as “positive,” “negative,” “fear,” “sadness,” etc. This process transforms a text into a “bag of words” and then replaces each word with its associated sentiment from the lexicon to infer overall emotion or polarity.

Key Steps in the Lexicon-Based Approach

1️⃣ Identify Words in the Text

Start by breaking the text (for example, a product review) into individual words.

2️⃣ Replace Words with Sentiments

For every word, retrieve its corresponding sentiment(s) from the lexicon.

3️⃣ Summarize the Sentiments

Count or aggregate how many times each sentiment appears. This provides quantitative insight into the overall feeling conveyed by the text.

Example:

A review containing words like “abandon,” “dislike,” and “terrible” would be analyzed by looking up these words in the lexicon.

For instance, the word “abandon” might be associated with sentiments such as fear, negative, and sadness.

Structure of a Sentiment Lexicon

- Size: Lexicons often contain thousands of words (some have more than 14,000 entries).

- Mapping: Each word is paired with one or more emotions or polarities.

- Example: “abandon” → [fear, negative, sadness]

Example Table

| Word | Sentiment(s) |

|---|---|

| abandon | fear, negative, sadness |

| joyful | positive, happiness |

| angry | negative, anger |

Summarizing and Reporting Sentiments

After mapping, the occurrences of each sentiment category are tallied and reported — often as counts or percentages.

This is useful for:

- Summing up a text’s overall polarity (positive, negative, neutral)

- Analyzing specific emotions expressed in a dataset
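
The three steps (identify words, look up sentiments, summarize) can be sketched as follows. The lexicon reuses the entries from the example table; the review text and the `summarize_sentiments` helper are assumptions for illustration.

```python
import re
from collections import Counter

# Entries from the example table above
LEXICON = {
    "abandon": ["fear", "negative", "sadness"],
    "joyful": ["positive", "happiness"],
    "angry": ["negative", "anger"],
}

def summarize_sentiments(text):
    """Return sentiment counts and percentage shares for a text."""
    tokens = re.findall(r"[a-z]+", text.lower())                # step 1
    tags = [s for tok in tokens for s in LEXICON.get(tok, [])]  # step 2
    counts = Counter(tags)                                      # step 3
    total = sum(counts.values())
    shares = {s: round(100 * n / total, 1) for s, n in counts.items()} if total else {}
    return counts, shares

review = "I had to abandon the app and I am angry"  # hypothetical review
counts, shares = summarize_sentiments(review)
print(dict(counts))  # {'fear': 1, 'negative': 2, 'sadness': 1, 'anger': 1}
print(shares)        # {'fear': 20.0, 'negative': 40.0, 'sadness': 20.0, 'anger': 20.0}
```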

Insight:

Sentiment summaries provide a clear, quantitative view of what a text expresses — ideal for dashboards, automated monitoring, and executive reporting.

Challenges with Lexicon-Based Sentiment Analysis

Despite its simplicity, this approach has notable limitations:

1. Context Sensitivity

Words can change meaning depending on context.

Example:

- “kill” in “killing the competition” → positive

- “kill” in “the product is going to get killed” → negative

2. Limited Coverage

Lexicons may not include slang, new terms, or misspellings, leading to incomplete analysis.

3. Intensity

Lexicons may not capture how strongly a sentiment is expressed (e.g., “good” vs. “excellent”).

4. Sarcasm & Irony

Standard lexicons struggle with sarcasm, cultural nuance, or idiomatic expressions.
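
The context problem from the “kill” example can be seen directly in code. In this sketch the lexicon entries and the `lexicon_score` helper are assumptions; the point is that a word-level lookup gives both sentences the same score even though a human reads them very differently.

```python
import re

# Hypothetical lexicon entries: both forms of "kill" are scored negative.
LEXICON = {"killing": -1, "killed": -1}

def lexicon_score(text):
    """Sum word-level scores, ignoring the surrounding context."""
    return sum(LEXICON.get(tok, 0) for tok in re.findall(r"[a-z]+", text.lower()))

praise = "We are killing the competition"
warning = "The product is going to get killed"

print(lexicon_score(praise))   # -1, although the sentence is positive
print(lexicon_score(warning))  # -1
```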

Key Takeaway

The performance of the lexicon-based approach depends heavily on the quality, coverage, and context-awareness of the lexicon being used.

Practical ways to improve results:

- Domain-Specific Lexicons: Use or build lexicons tailored to your specific industry or dataset.

- Preprocessing: Clean and normalize text to maximize matches with lexicon entries.

- Hybrid Approaches: Combine lexicon-based methods with machine learning for better accuracy and adaptability.

Summary: Pros vs. Cons

| Pros | Cons |

|---|---|

| Simple to implement | Struggles with context and sarcasm |

| Transparent and interpretable | Relies on lexicon quality and coverage |

| Fast, no training data needed | Limited nuance, can miss new expressions |

Conclusion

The lexicon-based sentiment analysis approach is a cornerstone method for extracting emotions and polarity from text.

Its strengths in simplicity and interpretability make it ideal for transparent applications — even though it faces challenges in handling context and evolving language.

For many use cases, especially where explainability and interpretability matter, this approach remains highly valuable. Understanding its mechanics forms a foundational skill for anyone pursuing data science, NLP, or text analytics.

