Exploring the Latest AWS AI & ML Services: Insights from AWS AI Practitioner Training

by Selwyn Davidraj Posted on September 07, 2025

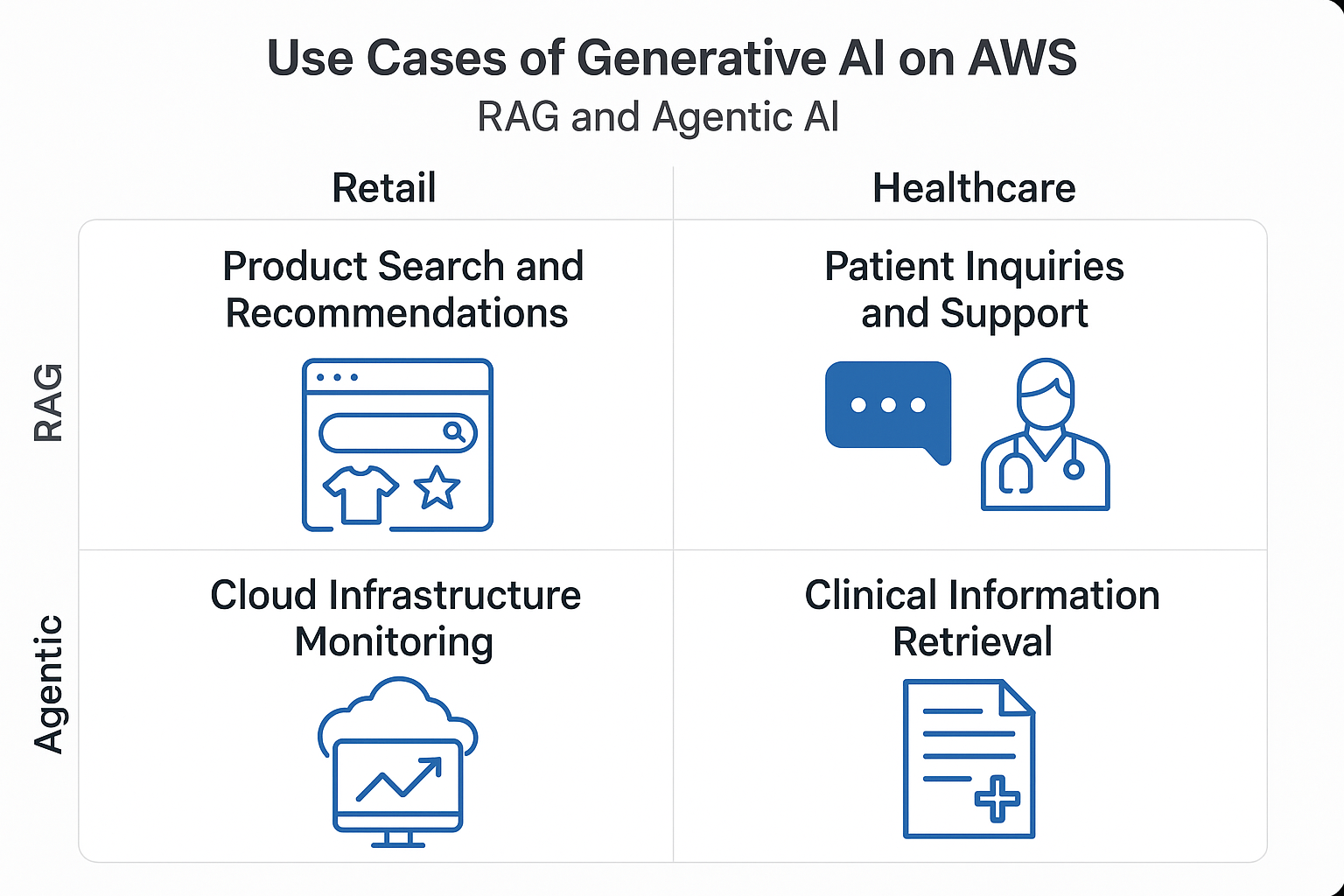

Insights from AWS AI Practitioner training on various AWS AI and ML services, showcasing how Generative AI, RAG, and Agentic AI can transform industries utilizing these services.

🚀 AWS AI Practitioner Training: My Experience & Key Takeaways

Recently, I had the opportunity to attend the AWS AI Practitioner training along with my fellow learners. It was an exhilarating dive into the latest advancements in Artificial Intelligence and Machine Learning from AWS. The session highlighted practical applications of these powerful services, especially in the areas of Generative AI, Retrieval-Augmented Generation (RAG), Agentic AI, and workflow orchestration.

In this post, I’ll share:

- 📌 Key highlights from the training

- 💡 Real-world use cases across industries

- 🔮 How I plan to leverage these services in future projects

🧩 AWS AI & ML Services at a Glance

Here’s a quick snapshot of the services we covered. While we couldn’t dive deep into every service, the two-day training provided an excellent overview.

| Service | What It Does |
|---|---|
| Amazon Bedrock | Build and scale generative AI apps using foundation models (fully managed). |
| Amazon Q | A generative AI assistant for work that can answer questions, summarize content, and take actions based on your business data. |
| Amazon SageMaker | End-to-end platform for building, training, and deploying ML models. |
| Amazon Titan Models | AWS’s proprietary foundation models for text & image generation. |
| AWS Lambda | Serverless compute to run code on events and power AI workflows. |
| Amazon Kendra | ML-powered enterprise search to retrieve precise information. |
| Amazon OpenSearch | Managed search & analytics engine for logs, monitoring, and data exploration. |
| Amazon Bedrock Agents | Automate multi-step tasks and workflows with AI-driven agents. |
| AWS Step Functions | Serverless orchestration for distributed applications & microservices. |
| Amazon Textract | Automatically extracts text, handwriting, and data from documents. |
| Amazon Lex | Build conversational interfaces (chatbots, voice bots) for applications. |

⚙️ Key Capabilities Highlighted in Training

Foundation Models and Amazon Bedrock

At the heart of modern Generative AI are Foundation Models (FMs), which are massive AI models trained on a vast amount of data. They can understand and generate human-like text, images, and other content. Amazon Bedrock acts as a fully managed service that provides secure, easy access to a diverse range of FMs from leading AI companies like Anthropic, Cohere, Meta, and Stability AI, alongside Amazon’s own Titan models. The key advantage is that it simplifies the process of building and scaling generative AI applications, as you don’t have to manage the underlying infrastructure or deal with the complexity of multiple APIs. You simply choose the model you want to use and focus on your application’s logic.
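To make the "choose a model and focus on your logic" idea concrete, here is a minimal sketch of calling a model through the Bedrock Runtime Converse API with boto3. The helper names (`build_converse_request`, `ask_model`, `demo_against_aws`) are my own, and the model ID and region are assumptions: which models you can call depends on your account, region, and the model access you have enabled.

```python
from typing import Any


def build_converse_request(model_id: str, prompt: str, max_tokens: int = 256) -> dict[str, Any]:
    """Build the keyword arguments for one Converse call."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.2},
    }


def ask_model(client: Any, model_id: str, prompt: str) -> str:
    """Send a single prompt and return the first text block of the reply."""
    response = client.converse(**build_converse_request(model_id, prompt))
    return response["output"]["message"]["content"][0]["text"]


def demo_against_aws() -> None:
    """Call a real model. Needs boto3, AWS credentials, and Bedrock model access."""
    import boto3  # imported here so the helpers above work without boto3 installed

    runtime = boto3.client("bedrock-runtime", region_name="us-east-1")  # region is an assumption
    # Assumed model ID; switching providers means changing this string, not the calling code.
    print(ask_model(runtime, "amazon.titan-text-express-v1", "Explain RAG in one sentence."))
```

Calling `demo_against_aws()` sends one request to the service. Because the client is passed in as a parameter, the same `ask_model` function can be exercised in unit tests with a stub client and no AWS account.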

Retrieval-Augmented Generation (RAG)

While FMs are powerful, they are trained on a static dataset and can sometimes generate inaccurate or fabricated information, a phenomenon known as “hallucination.” Retrieval-Augmented Generation (RAG) mitigates this by grounding the FM’s responses in external, trusted data. Before the FM generates a response, the system first retrieves relevant information from a knowledge base (like an internal company document repository). The retrieved passages are then passed to the FM as context alongside the query, which makes the final output more likely to be accurate and relevant, though it does not rule out errors entirely. AWS facilitates RAG with services like Amazon Kendra for intelligent enterprise search and Amazon OpenSearch Service for vector search and data exploration.
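The retrieve-then-generate flow can be sketched in a few lines of plain Python. This is a toy illustration, not how Kendra or OpenSearch work internally: the knowledge base is made-up sample text, retrieval is a simple word-overlap cosine score standing in for a real search or vector index, and every function name here is my own.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Step 1: rank documents by similarity to the query and keep the top k."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_grounded_prompt(query: str, passages: list[str]) -> str:
    """Step 2: put the retrieved passages in front of the question as context."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the context below. "
        "If the answer is not there, say you do not know.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Made-up sample documents standing in for a company knowledge base.
KNOWLEDGE_BASE = [
    "Refunds are issued within 14 days of a return request.",
    "Support is available on weekdays from 9am to 5pm.",
    "Standard shipping takes 3 to 5 business days.",
]

question = "How long do refunds take?"
passages = retrieve(question, KNOWLEDGE_BASE, k=1)
prompt = build_grounded_prompt(question, passages)
print(prompt)  # Step 3 would send this prompt to a foundation model.
```

In a real system, the `retrieve` step is where a managed search service or vector store would plug in, and the resulting prompt would go to a foundation model rather than being printed.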

Agentic AI and Workflows

Agentic AI represents a new frontier in automation, enabling AI models to autonomously perform complex, multi-step tasks. An AI agent is a system that can reason, create a plan, and execute that plan by calling external tools or APIs to achieve a defined goal. Agents for Amazon Bedrock provides a framework for building these agents, which can interact with your company’s data, invoke APIs to take actions, and complete workflows. For orchestrating these intricate, multi-step processes, AWS offers AWS Step Functions, a serverless workflow service. These agents can also be integrated with low-code platforms like n8n to build sophisticated automation without extensive coding.
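The reason, plan, and execute loop described above can be illustrated without any AWS dependency. In this sketch a scripted function stands in for the model’s reasoning step, and the two tools, the order data, and all names are hypothetical. It is not the Amazon Bedrock Agents API, only the general shape of an agent loop.

```python
from typing import Callable

# Each history entry is (tool_name, observation).
History = list[tuple[str, str]]


def get_order_status(order_id: str) -> str:
    """Hypothetical tool: look up an order in a made-up table."""
    return {"A100": "shipped", "A200": "processing"}.get(order_id, "unknown")


def send_notification(message: str) -> str:
    """Hypothetical tool: pretend to notify the customer."""
    return f"sent: {message}"


TOOLS: dict[str, Callable[[str], str]] = {
    "get_order_status": get_order_status,
    "send_notification": send_notification,
}


def scripted_planner(goal: str, history: History) -> dict[str, str]:
    """Stand-in for the model: pick the next action from what has happened so far."""
    if not history:
        return {"tool": "get_order_status", "input": "A100"}
    if len(history) == 1:
        return {"tool": "send_notification", "input": f"Order A100 is {history[0][1]}"}
    return {"final": f"Done: {history[-1][1]}"}


def run_agent(goal: str, planner: Callable[[str, History], dict[str, str]],
              tools: dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Ask the planner for an action, run the tool, record the result, repeat."""
    history: History = []
    for _ in range(max_steps):
        decision = planner(goal, history)
        if "final" in decision:
            return decision["final"]
        observation = tools[decision["tool"]](decision["input"])
        history.append((decision["tool"], observation))
    raise RuntimeError("agent did not finish within max_steps")


result = run_agent("Tell the customer where order A100 is", scripted_planner, TOOLS)
print(result)  # Done: sent: Order A100 is shipped
```

The `max_steps` cap is a deliberate design choice: an agent that decides its own next action needs a hard stop so that a confused plan cannot loop forever.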

Beyond Generative AI

The AWS ecosystem also provides a comprehensive suite of AI services that extend beyond generative AI. These services are essential for a wide range of tasks and are often used in combination with generative AI.

- Amazon SageMaker: This is the foundational platform for the entire machine learning lifecycle, from building and training models to deploying and managing them at scale.

- Amazon Rekognition: An image and video analysis service that can identify objects, people, text, and activities.

- Amazon Comprehend: This service uses natural language processing (NLP) to extract insights and relationships from unstructured text through features such as sentiment analysis, key phrase extraction, and entity recognition.

- Amazon Textract: A machine learning service that automatically extracts printed text, handwriting, and data from scanned documents.

- Amazon Lex: A service for building conversational interfaces for applications using voice and text, which can be the front end for many AI-powered experiences.
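As one example of combining these services, here is a rough sketch that reads the text from a scanned feedback form with Amazon Textract and then asks Amazon Comprehend for its sentiment. The boto3 operations used are `detect_document_text` and `detect_sentiment`. The function names and the scanned-feedback scenario are my own assumptions, and the clients are passed in so the logic can be tried with stubs.

```python
from typing import Any


def extract_lines(textract: Any, image_bytes: bytes) -> list[str]:
    """Return the text of every LINE block Textract finds in the image."""
    response = textract.detect_document_text(Document={"Bytes": image_bytes})
    return [b["Text"] for b in response["Blocks"] if b["BlockType"] == "LINE"]


def sentiment_of(comprehend: Any, text: str) -> str:
    """Return Comprehend's overall sentiment label for English text."""
    response = comprehend.detect_sentiment(Text=text, LanguageCode="en")
    return response["Sentiment"]  # POSITIVE, NEGATIVE, NEUTRAL, or MIXED


def analyze_scanned_feedback(textract: Any, comprehend: Any, image_bytes: bytes) -> dict[str, str]:
    """Chain the two services: document image -> text -> sentiment."""
    text = " ".join(extract_lines(textract, image_bytes))
    return {"text": text, "sentiment": sentiment_of(comprehend, text)}
```

With real credentials, the clients would come from `boto3.client("textract")` and `boto3.client("comprehend")`. Comprehend limits how much text a single request can carry, so long documents would need to be split before the sentiment call.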

🌍 Real-World Use Cases

“AWS Generative AI services empower smarter, more personalized experiences across industries.”

🌟 Personal Reflections & Next Steps

Attending the AWS AI Practitioner training was a fantastic and inspiring experience. I’ve come away with countless ideas on how to integrate these powerful AI/ML services into the projects I’m passionate about, particularly in the worlds of DevOps, SRE, and cloud operations.

Highlights for me:

- 👥 Collaborating with peers and exchanging ideas

- 🛠️ Hands-on labs that showed the “art of the possible”

- 💡 Real-world use cases that sparked new project ideas

- 🎓 Preparing for the AWS Certified AI Practitioner exam

Over the next few months, I’ll be documenting my journey of building practical use cases and sharing the full implementation details here on the blog. I look forward to exploring these real-world solutions with you all.
